June 19, 2021, Saturday. 1st day of CVPR. Virtual workshop.

Starts at 10 am Eastern Time (4 pm Central European Summer Time).

Held in conjunction with the IEEE Conference on Computer Vision and Pattern Recognition 2021.

Welcome to the Third International Workshop on Event-Based Vision!

Pre-recorded videos

Watch all the videos here (YouTube playlist).

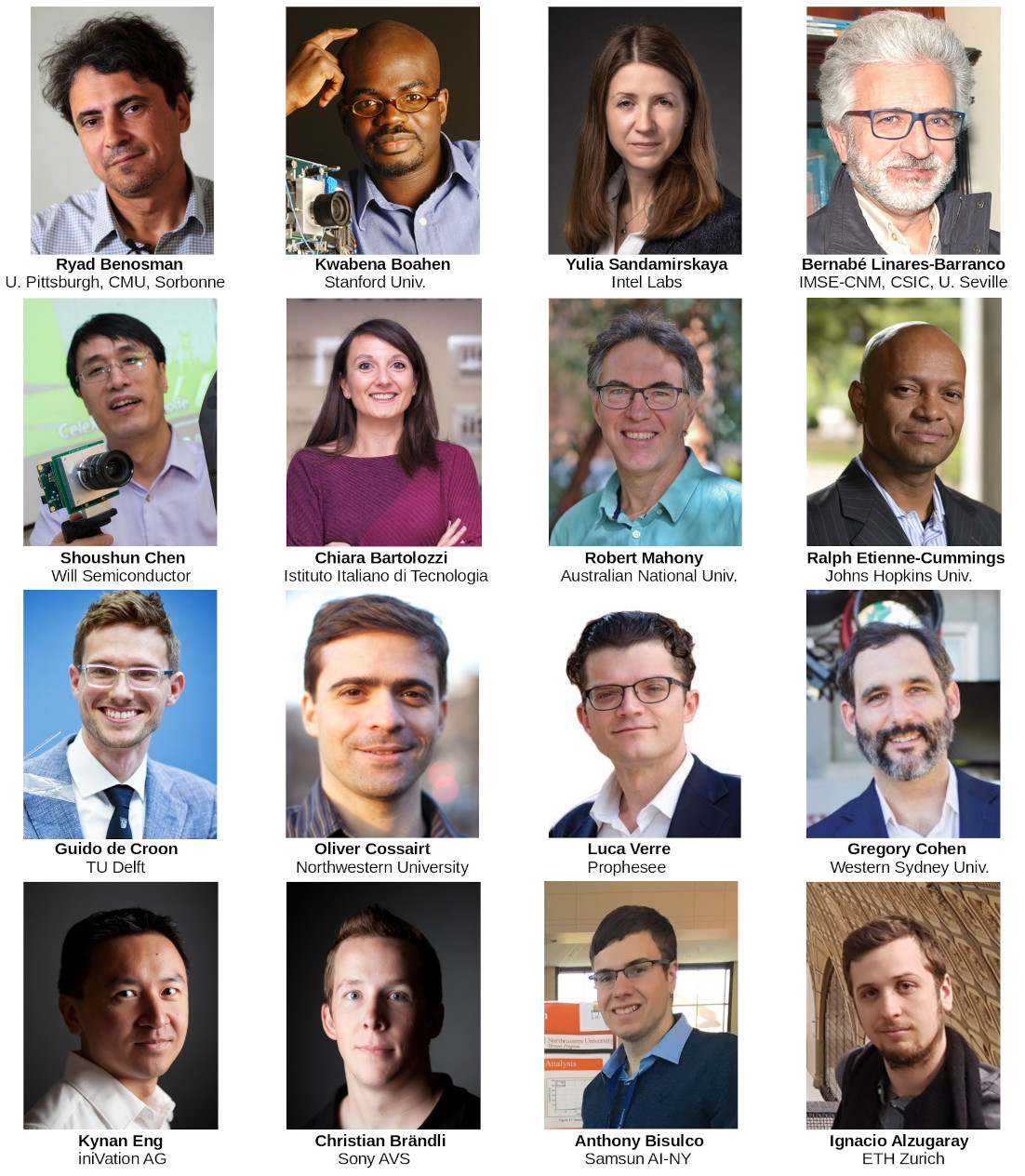

Invited Speakers

Schedule

| Time (ET) | Speaker | Title |
|---|---|---|
| 10:00 | Welcome and Organization | Video, Slides |
| 10:10 | **SESSION 1** | Video of live session |
| 10:10 | Ryad Benosman (University of Pittsburgh, CMU, Sorbonne) | Event Computer Vision 10 years Assessment: Where We Came From, Where We Are and Where We Are Heading To. Video, Slides |
| 10:20 | Gregory Cohen (Western Sydney Univ.) | Neuromorphic Vision Applications: From Robotic Foosball to Tracking Space Junk. Video |
| 10:30 | Robert Mahony (Australian National Univ.) | Fusing Frame and Event data for High Dynamic Range Video. Video, Slides, Paper |
| 10:40 | Kynan Eng (CEO of iniVation) | High-Performance Neuromorphic Vision: From Core Technologies to Applications. Video |
| 10:50 | Panel discussion | |
| 11:10 | **SESSION 2** | Video of live session |
| 11:10 | Bernabé Linares-Barranco (IMSE-CNM, CSIC and Univ. Seville) | Event-driven convolution based processing. Video, Slides |
| 11:20 | Kwabena Boahen (Stanford) | Routing Events in Two-Dimensional Arrays with a Tree. Video, Slides |
| 11:30 | Ralph Etienne-Cummings (Johns Hopkins Univ.) | Learning Spatiotemporal Filters to Track Event-Based Visual Saliency. Video, Slides |
| 11:40 | Ignacio Alzugaray (ETH Zurich) | Towards Asynchronous SLAM with Event Cameras. Video, Slides |
| 11:50 | Panel discussion | |
| 12:10 | **INTERMISSION** | |
| 12:10 | Mathias Gehrig | DSEC competition. Video of live session |
| 12:20 | Award Ceremony | Video of live session |
| 12:30 | Poster session of accepted papers and courtesy presentations | |
| | Yi Zhou (HKUST) | Event-based Visual Odometry: A Short Tutorial. Video, Slides |
| | S. Tulyakov (Huawei) and D. Gehrig (UZH) | Time Lens: Event-based Video Frame Interpolation. Video, Slides |
| | Federico Paredes-Vallés (TU Delft) | Back to Event Basics: Self-Supervised Learning of Image Reconstruction for Event Cameras via Photometric Constancy. Video, Slides |
| | Daqi Liu (U. Adelaide) | Spatiotemporal Registration for Event-based Visual Odometry. Video |
| | Cornelia Fermüller (U. Maryland) | 0-MMS: Zero-Shot Multi-Motion Segmentation With A Monocular Event Camera. Video |
| | Cornelia Fermüller (U. Maryland) | EVPropNet: Detecting Drones By Finding Propellers For Mid-Air Landing And Following. Video |
| | Joe Maljian (Oculi, Inc.) | Oculi products portfolio. Video, Slides |
| 14:00 | **SESSION 3** | Video of live session |
| 14:00 | Yulia Sandamirskaya (Intel Labs) | Neuromorphic computing hardware and event based vision: a perfect match? Video |
| 14:10 | Anthony Bisulco, Daewon Lee, Daniel D. Lee, Volkan Isler (Samsung AI Center NY) | High Speed Perception-Action Systems with Event-Based Cameras. Video, Slides |
| 14:20 | Guido de Croon (TU Delft, Netherlands) | Event-based vision and processing for tiny drones. Video, Slides |
| 14:30 | Chiara Bartolozzi (IIT, Italy) | Neuromorphic vision for humanoid robots. Video |
| 14:40 | Panel discussion | |
| 15:00 | **SESSION 4** | Video of live session |
| 15:00 | Luca Verre (Co-founder and CEO of Prophesee) | From the lab to the real world: event-based vision evolves as a commercial force. Video, Slides |
| 15:10 | Oliver Cossairt (Northwestern Univ.) | Hardware and Algorithm Co-design with Event Sensors. Video, Slides |
| 15:20 | Shoushun Chen (Founder of CelePixel, Will Semiconductor) | Development of Event-based Sensor and Applications. Video, Slides |
| 15:30 | Christian Brändli (CEO of Sony AVS) | Event-Based Computer Vision At Sony Advanced Visual Sensing. Video, Slides |
| 15:40 | Panel discussion | |

Accepted Papers

- v2e: From Video Frames to Realistic DVS Events. Supplementary material, Video

- Differentiable Event Stream Simulator for Non-Rigid 3D Tracking. Supplementary material, Video, Slides

- Comparing Representations in Tracking for Event Camera-based SLAM. Video, Slides

- Image Reconstruction from Neuromorphic Event Cameras using Laplacian-Prediction and Poisson Integration with Spiking and Artificial Neural Networks. Video, Slides

- Detecting Stable Keypoints from Events through Image Gradient Prediction. Video, Slides

- EFI-Net: Video Frame Interpolation from Fusion of Events and Frames. Supplementary material, Video, Slides

- DVS-OUTLAB: A Neuromorphic Event-Based Long Time Monitoring Dataset for Real-World Outdoor Scenarios. Video

- N-ROD: a Neuromorphic Dataset for Synthetic-to-Real Domain Adaptation. Video, Poster

- Lifting Monocular Events to 3D Human Poses. Video, Slides

- A Cortically-inspired Architecture for Event-based Visual Motion Processing: From Design Principles to Real-world Applications. Video, Slides

- Spike timing-based unsupervised learning of orientation, disparity, and motion representations in a spiking neural network. Supplementary material, Video, Slides

- Feedback control of event cameras. Video

- How to Calibrate Your Event Camera. Project page, Video

- Live Demonstration: Incremental Motion Estimation for Event-based Cameras by Dispersion Minimisation.

Reviewer Acknowledgement

We thank our reviewers for a thorough review process.

Objectives

This workshop is dedicated to event-based cameras, smart cameras, and algorithms that process data from these sensors. Event-based cameras are bio-inspired sensors whose key advantages are microsecond temporal resolution, low latency, very high dynamic range, and low power consumption. Because of these advantages, event-based cameras open frontiers that are unthinkable with standard frame-based cameras (which have been the main sensing technology for the past 60 years). These revolutionary sensors enable the design of a new class of algorithms to track a baseball in the moonlight, build a flying robot with the agility of a fly, and perform structure from motion in challenging lighting conditions and at remarkable speeds.

These sensors became commercially available in 2008 and are slowly being adopted in computer vision and robotics. In recent years they have received attention from large companies: for example, the event-sensor company Prophesee collaborated with Intel and Bosch on a high-spatial-resolution sensor, Samsung announced mass production of a sensor to be used in hand-held devices, and event cameras have been used in various applications on neuromorphic chips such as IBM's TrueNorth and Intel's Loihi.

The workshop also considers novel vision sensors, such as pixel processor arrays (PPAs), that perform massively parallel processing near the image plane. Because early vision computations are carried out on-sensor, the resulting systems achieve high speed and low power consumption, enabling new embedded vision applications in areas such as robotics, AR/VR, automotive, gaming, and surveillance. This workshop will cover the sensing hardware, as well as the processing and learning methods needed to take advantage of these novel cameras.

Topics Covered

- Event-based / neuromorphic vision.

- Algorithms: visual odometry, SLAM, 3D reconstruction, optical flow estimation, image intensity reconstruction, recognition, stereo depth reconstruction, feature/object detection, tracking, calibration, sensor fusion (video synthesis, visual-inertial odometry, etc.).

- Model-based, embedded, or learning approaches.

- Event-based signal processing, representation, control, bandwidth control.

- Event-based active vision, event-based sensorimotor integration.

- Event camera datasets and/or simulators.

- Applications in: robotics (navigation, manipulation, drones…), automotive, IoT, AR/VR, space science, inspection, surveillance, crowd counting, physics, biology.

- Near-focal-plane processing, such as pixel processor arrays (PPAs), e.g., the SCAMP sensor.

- Biologically-inspired vision and smart cameras.

- Novel hardware (cameras, neuromorphic processors, etc.) and/or software platforms.

- New trends and challenges in event-based and/or biologically-inspired vision (SNNs, etc.).

- Event-based vision for computational photography.

- A longer list of related topics is available in the table of contents of the List of Event-based Vision Resources.

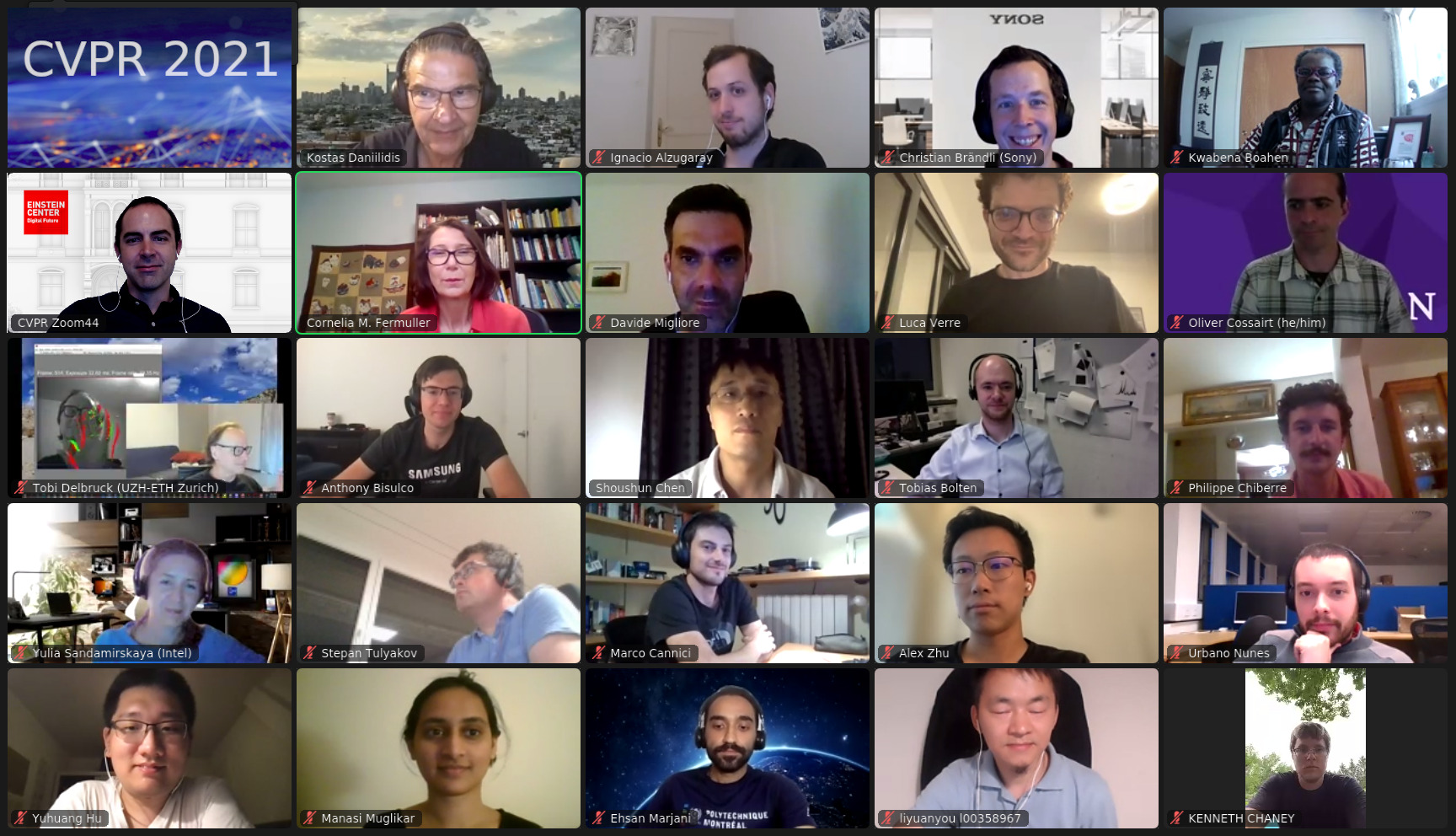

Organizers

- Guillermo Gallego, Technische Universität Berlin and Einstein Center Digital Future, Germany.

- Davide Scaramuzza, University of Zurich, Switzerland.

- Kostas Daniilidis, University of Pennsylvania, USA.

- Cornelia Fermüller, University of Maryland, USA.

- Davide Migliore, Prophesee, France.

Sponsor

This workshop is sponsored by event camera manufacturer

FAQs

- What is an event camera? Watch this video explanation.

- What are possible applications of event cameras? Check the TPAMI 2020 review paper.

- Where can I buy an event camera? From iniVation, Prophesee, CelePixel, or Insightness.

- Are there datasets and simulators that I can play with? Yes: Dataset, Simulator, More.

- Is there any online course about event-based vision? Yes, check this course at TU Berlin.

- What is the SCAMP sensor? Read this explanation page.

- What are possible applications of the SCAMP sensor? Some applications can be found here.

- Where can I buy a SCAMP sensor? It is not commercially available. Contact Prof. Piotr Dudek.

- Where can I find more information? Check out this List of Event-based Vision Resources.

Upcoming Related Workshops

- 2021 Telluride Neuromorphic Workshop. Starts on Sunday, June 27.

- ICCV 2021 Tutorial: Introduction to Event Detection Cameras. Register for free.

Past Related Workshops

- ICRA 2021 Workshop On- and Near-sensor Vision Processing, from Photons to Applications (ONSVP).

- ICRA 2020 Workshop on Sensing, Estimating and Understanding the Dynamic World. Session on Event-based camera companies iniVation and Prophesee.

- CVPR 2019 Second International Workshop on Event-based Vision and Smart Cameras.

- IROS 2018 Workshop on Unconventional Sensing and Processing for Robotic Visual Perception.

- ICRA 2017 First International Workshop on Event-based Vision.

- ICRA 2015 Workshop on Innovative Sensing for Robotics, with focus on Neuromorphic Sensors.

- IROS 2015 Workshop on Alternative Sensing for Robot Perception: Event-Based Vision for High-Speed Robotics (slides).

- The Telluride Neuromorphic Cognition Engineering Workshops.

- Capo Caccia Workshops toward Cognitive Neuromorphic Engineering.

Acknowledgment

The Microsoft CMT service was used for managing the peer-reviewing process for this conference. This service was provided for free by Microsoft and they bore all expenses, including costs for Azure cloud services as well as for software development and support.