June 19, 2023, Monday. 2nd day of CVPR, Vancouver, Canada.

Starts at 8 am local time; 4 pm Europe time.

Held in conjunction with the IEEE Conference on Computer Vision and Pattern Recognition 2023.

Welcome to the 4th International Workshop on Event-Based Vision!

Many thanks to all who contributed and made this workshop possible!

Photo Album of the Workshop

Videos! YouTube Playlist

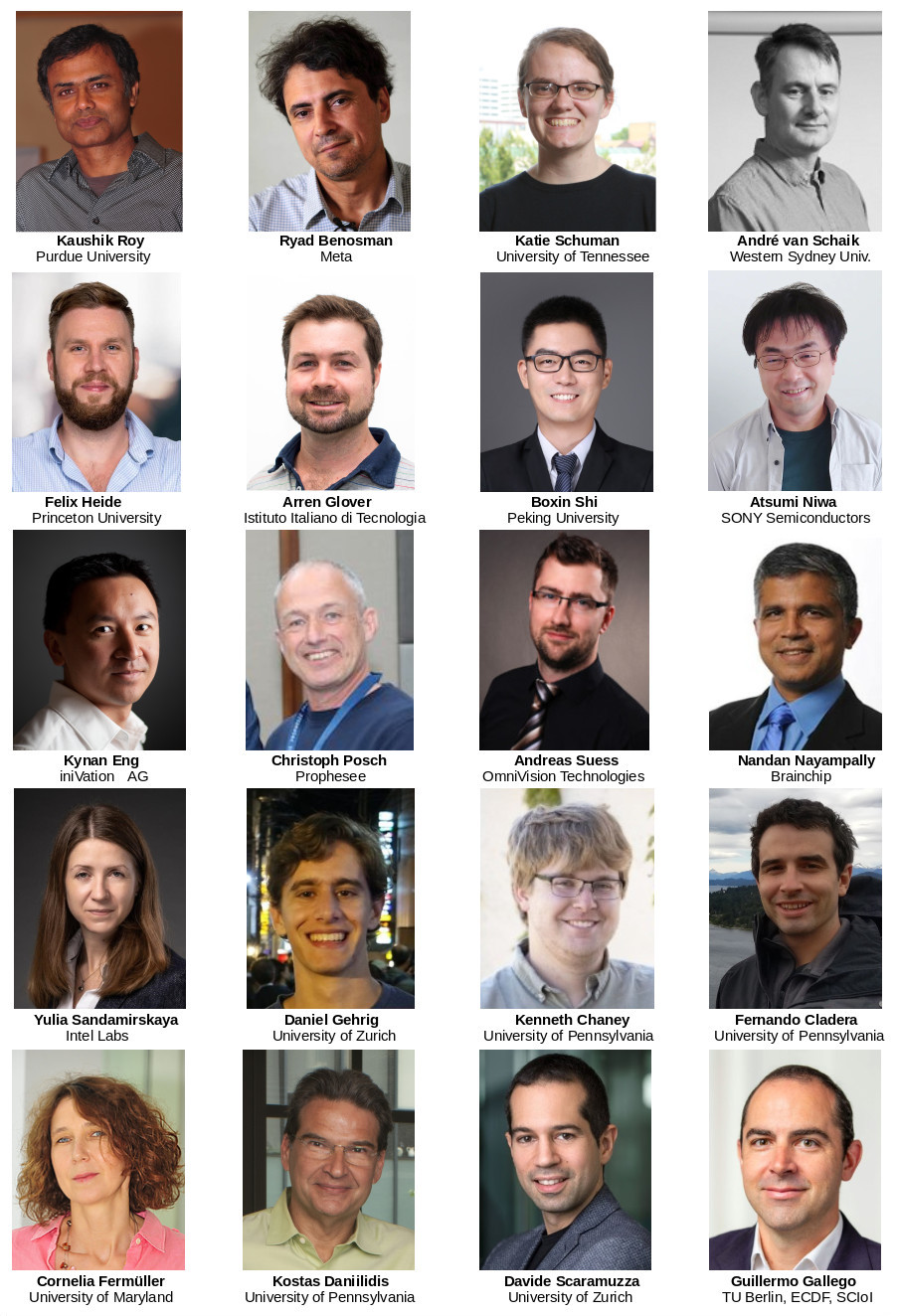

Speakers

Schedule (starts at 8 am local time)

| Time | Speaker and Title |
|---|---|
| 08:00 | SESSION 1 |
| 08:00 | Welcome and Organization. Video, Slides |
| 08:05 | Kaushik Roy (Purdue University). Re-thinking Computing with Neuro-Inspired Learning: Algorithms, Sensors, and Hardware Architecture. Video, Slides |
| 08:35 | Ryad Benosman (Meta). The Interplay Between Events and Frames: A Comprehensive Explanation. Video, Slides |
| 09:05 | Katie Schuman (University of Tennessee). A Workflow for Low-Power Neuromorphic Computing for Event-Based Vision Applications. Video |
| 09:35 | Andre van Schaik (Western Sydney University). Applications of Event-based Vision at the International Centre for Neuromorphic Systems. Video |
| 10:00 | Coffee break. Set up posters |
| 10:30 | SESSION 2 |
| 10:30 | Poster session: contributed papers, demos and courtesy papers (as posters). See links below. Boards 1-49. |
| 12:30 | Lunch break |
| 13:30 | SESSION 3 |
| 13:30 | Felix Heide (Princeton University). Neural Nanophotonic Cameras. Video |
| 14:00 | Arren Glover (Italian Institute of Technology). Real-time, Speed-invariant, Vision for Robotics. Video, Slides |
| 14:30 | NeuroPAC. Video |
| 14:35 | Cornelia Fermüller (University of Maryland). When do neuromorphic sensors outperform cameras? Learning from dynamic features. Video, Slides |
| 14:40 | Daniel Gehrig (Scaramuzza's Lab, University of Zurich). Efficient event processing with geometric deep learning. Video, Slides |
| 14:45 | Guillermo Gallego (TU Berlin, ECDF, SCIoI). Event-based Robot Vision for Autonomous Systems and Animal Observation. Video, Slides |
| 14:50 | Kenneth Chaney and Fernando Cladera (Daniilidis' Lab, University of Pennsylvania). M3ED: Multi-robot, Multi-Sensor, Multi-Environment Event Dataset. Video |
| 15:00 | Boxin Shi (Peking University). NeuCAP: Neuromorphic Camera Aided Photography. Video, Slides |
| 15:30 | Coffee break |
| 16:00 | SESSION 4 |
| 16:00 | Yulia Sandamirskaya and Andreas Wild (Intel Labs). Visual Processing with Loihi 2. Video, Slides |
| 16:20 | Kynan Eng (iniVation). Beyond Frames and Events: Next Generation Visual Sensing. Video |
| 16:40 | Atsumi Niwa (SONY). Event-based Vision Sensor and On-chip Processing Development. Video, Slides |
| 17:00 | Andreas Suess (OmniVision Technologies). Towards hybrid event/image vision. Video |
| 17:20 | Nandan Nayampally (Brainchip). Enabling Ultra-Low Power Edge Inference and On-Device Learning with Akida. Video |
| 17:35 | Christoph Posch (Prophesee). Event sensors for embedded AI vision applications. Video |
| 17:50 | Award Ceremony. Video, Slides |

Proceedings at the Computer Vision Foundation (CVF)

List of accepted papers and live demos

- M3ED: Multi-Robot, Multi-Sensor, Multi-Environment Event Dataset, Dataset, Code

- Asynchronous Events-based Panoptic Segmentation using Graph Mixer Neural Network, Suppl mat, Code and data

- Event-IMU fusion strategies for faster-than-IMU estimation throughput, Suppl mat

- Fast Trajectory End-Point Prediction with Event Cameras for Reactive Robot Control, Suppl mat, Code

- Exploring Joint Embedding Architectures and Data Augmentations for Self-Supervised Representation Learning in Event-Based Vision, Suppl mat, Poster, Code

- Event-based Blur Kernel Estimation For Blind Motion Deblurring

- Neuromorphic Event-based Facial Expression Recognition, Dataset

- Low-latency monocular depth estimation using event timing on neuromorphic hardware

- Frugal event data: how small is too small? A human performance assessment with shrinking data, Poster

- Flow cytometry with event-based vision and spiking neuromorphic hardware, Code, Poster

- How Many Events Make an Object? Improving Single-frame Object Detection on the 1 Mpx Dataset, Suppl mat, Code

- EVREAL: Towards a Comprehensive Benchmark and Analysis Suite for Event-based Video Reconstruction, Suppl mat, Code, Poster

- X-maps: Direct Depth Lookup for Event-based Structured Light Systems, Poster, Code

- PEDRo: an Event-based Dataset for Person Detection in Robotics, Dataset

- Density Invariant Contrast Maximization for Neuromorphic Earth Observations, Suppl mat, Code, Poster

- Entropy Coding-based Lossless Compression of Asynchronous Event Sequences, Suppl mat, Poster

- MoveEnet: Online High-Frequency Human Pose Estimation with an Event Camera, Suppl mat, Code

- Predictive Coding Light: learning compact visual codes by combining excitatory and inhibitory spike timing-dependent plasticity

- Neuromorphic Optical Flow and Real-time Implementation with Event Cameras, Suppl mat, Poster, Video

- HUGNet: Hemi-Spherical Update Graph Neural Network applied to low-latency event-based optical flow, Suppl mat

- End-to-end Neuromorphic Lip-reading, Poster

- Sparse-E2VID: A Sparse Convolutional Model for Event-Based Video Reconstruction Trained with Real Event Noise, Suppl mat, Video

- Interpolation-Based Event Visual Data Filtering Algorithms, Code

- Within-Camera Multilayer Perceptron DVS Denoising, Suppl mat, Code, Poster

- Shining light on the DVS pixel: A tutorial and discussion about biasing and optimization, Tool

- PDAVIS: Bio-inspired Polarization Event Camera, Suppl mat, Code

- Live Demonstration: E2P–Events to Polarization Reconstruction from PDAVIS Events, Suppl mat, Code

- Live Demonstration: PINK: Polarity-based Anti-flicker for Event Cameras, Video

- Live Demonstration: Event-based Visual Microphone, Suppl mat

- Live Demonstration: SCAMP-7

- Live Demonstration: ANN vs SNN vs Hybrid Architectures for Event-based Real-time Gesture Recognition and Optical Flow Estimation

- Live Demonstration: Tangentially Elongated Gaussian Belief Propagation for Event-based Incremental Optical Flow Estimation, Code

- Live Demonstration: Real-time Event-based Speed Detection using Spiking Neural Networks

- Live Demonstration: Integrating Event-based Hand Tracking Into TouchFree Interactions, Suppl mat

List of courtesy papers (as posters, during Session 2)

- Event Collapse in Motion Estimation using Contrast Maximization, by Shintaro Shiba, from Keio University and TU Berlin. Poster

- Deep Asynchronous Graph Neural Networks for Events and Frames, by Daniel Gehrig, from the University of Zurich.

- Animal behavior observation with event cameras, by Friedhelm Hamann, from TU Berlin and the Science of Intelligence Excellence Cluster (SCIoI). Poster

- Recurrent Vision Transformers for Object Detection with Event Cameras, by Mathias Gehrig, from the University of Zurich, CVPR 2023.

- Learning to Estimate Two Dense Depths from LiDAR and Event Data, by Vincent Brebion (Université de Technologie de Compiègne), Julien Moreau and Franck Davoine, SCIA 2023. Project Page (suppl. material, poster, code, dataset, videos). PDF

- ESS: Learning Event-based Semantic Segmentation from Still Images, by Nico Messikomer, from the University of Zurich, ECCV 2022.

- Multi-event-camera Depth Estimation, by Suman Ghosh, from TU Berlin. Poster

- Event-based shape from polarization, by Manasi Muglikar, from the University of Zurich, CVPR 2023.

- All-in-focus Imaging from Event Focal Stack, by Hanyue Lou, Minggui Teng, Yixin Yang, and Boxin Shi, CVPR 2023.

- Event-aided Direct Sparse Odometry, by Javier Hidalgo-Carrió, from the University of Zurich. Poster

- High-fidelity Event-Radiance Recovery via Transient Event Frequency, by Jin Han, Yuta Asano, Boxin Shi, Yinqiang Zheng, and Imari Sato, from the University of Tokyo, NII and Peking University, CVPR 2023. Poster

Papers at the main conference (CVPR 2023)

- Adaptive Global Decay Process for Event Cameras, Code

- All-in-focus Imaging from Event Focal Stack, Video

- Data-driven Feature Tracking for Event Cameras, Video, Code

- Deep Polarization Reconstruction with PDAVIS Events, Video, Code

- Event-based Blurry Frame Interpolation under Blind Exposure, Code

- Event-Based Frame Interpolation with Ad-hoc Deblurring, Video, Code

- Event-based Shape from Polarization, Video, Code

- Event-based Video Frame Interpolation with Cross-Modal Asymmetric Bidirectional Motion Fields, Video

- Event-guided Person Re-Identification via Sparse-Dense Complementary Learning, Code

- EventNeRF: Neural Radiance Fields from a Single Colour Event Camera, Video, Project page

- EvShutter: Transforming Events for Unconstrained Rolling Shutter Correction, Video

- Frame-Event Alignment and Fusion Network for High Frame Rate Tracking, Code

- Hierarchical Neural Memory Network for Low Latency Event Processing, Video, Project page

- High-fidelity Event-Radiance Recovery via Transient Event Frequency, Video, Code

- Learning Adaptive Dense Event Stereo from the Image Domain

- Learning Event Guided High Dynamic Range Video Reconstruction, Video

- Learning Spatial-Temporal Implicit Neural Representations for Event-Guided Video Super-Resolution, Video

- Progressive Spatio-temporal Alignment for Efficient Event-based Motion Estimation, Code

- Recurrent Vision Transformers for Object Detection with Event Cameras, Video, Code

- “Seeing” Electric Network Frequency from Events, Project page

- Tangentially Elongated Gaussian Belief Propagation for Event-based Incremental Optical Flow Estimation, Video, Code

Objectives

This workshop is dedicated to event-based cameras, smart cameras, and algorithms that process data from these sensors. Event-based cameras are bio-inspired sensors whose key advantages are microsecond temporal resolution, low latency, very high dynamic range, and low power consumption. Because of these advantages, event-based cameras open up possibilities that are out of reach for standard frame-based cameras (the main sensing technology for the past 60 years). These sensors enable a new class of algorithms to track a baseball in the moonlight, build a flying robot with the agility of a bee, and perform structure from motion in challenging lighting conditions and at high speeds. Event cameras became commercially available in 2008 and are slowly being adopted in computer vision and robotics. In recent years they have received attention from large companies. For example, the event-sensor company Prophesee collaborated with Intel and Bosch on a high-spatial-resolution sensor, Samsung announced mass production of a sensor for hand-held devices, and event cameras have been used in various applications together with neuromorphic chips such as IBM's TrueNorth and Intel's Loihi.

The workshop also considers novel vision sensors, such as pixel processor arrays (PPAs), which perform massively parallel processing near the image plane. Because early vision computations are carried out on-sensor, the resulting systems have high speed and low power consumption, enabling new embedded vision applications in areas such as robotics, AR/VR, automotive, gaming, and surveillance. This workshop covers the sensing hardware as well as the processing and learning methods needed to take advantage of these novel cameras.

Topics Covered

- Event-based / neuromorphic vision.

- Algorithms: motion estimation, visual odometry, SLAM, 3D reconstruction, image intensity reconstruction, optical flow estimation, recognition, feature/object detection, visual tracking, calibration, sensor fusion (video synthesis, visual-inertial odometry, etc.).

- Model-based, embedded, or learning-based approaches.

- Event-based signal processing, representation, control, bandwidth control.

- Event-based active vision, event-based sensorimotor integration.

- Event camera datasets and/or simulators.

- Applications in: robotics (navigation, manipulation, drones…), automotive, IoT, AR/VR, space science, inspection, surveillance, crowd counting, physics, biology.

- Biologically inspired vision and smart cameras.

- Near-focal plane processing, such as pixel processor arrays (PPAs).

- Novel hardware (cameras, neuromorphic processors, etc.) and/or software platforms, such as fully event-based systems (end-to-end).

- New trends and challenges in event-based and/or biologically inspired vision (SNNs, etc.).

- Event-based vision for computational photography.

- A longer list of related topics is available in the table of contents of the List of Event-based Vision Resources.

Organizers

- Guillermo Gallego, TU Berlin, Einstein Center Digital Future, Science of Intelligence Excellence Cluster (SCIoI), Germany.

- Davide Scaramuzza, University of Zurich, Switzerland.

- Kostas Daniilidis, University of Pennsylvania, USA.

- Cornelia Fermüller, University of Maryland, USA.

- Davide Migliore, Prophesee, France.

Important Dates

- Paper submission deadline: March 20, 2023 (23:59h PST). Submission website (CMT)

- Demo abstract submission: March 20, 2023 (23:59h PST)

- Notification to authors: April 3, 2023

- Camera-ready paper: April 14, 2023 (deadline by IEEE)

- Early-bird registration: April 30 (23:59h ET). Standard registration begins May 1.

- Workshop day: June 19, 2023. 2nd day of CVPR. Full-day workshop.

FAQs

- What is an event camera? Watch this video explanation.

- What are possible applications of event cameras? Check the TPAMI 2022 review paper.

- Where can I buy an event camera? From iniVation, Prophesee, CelePixel, or Insightness.

- Are there datasets and simulators that I can play with? Yes, Dataset. Simulator. More.

- Is there any online course about event-based vision? Yes, check this course at TU Berlin.

- What is the SCAMP sensor? Read this explanation page.

- What are possible applications of the SCAMP sensor? Some applications can be found here.

- Where can I buy a SCAMP sensor? It is not commercially available. Contact Prof. Piotr Dudek.

- Where can I find more information? Check out this List of Event-based Vision Resources.

Upcoming Related Workshops

- Caméra à événements appliquée à la robotique, Sorbonne University, Paris (Nov. 16th, 2023). Announcement

Past Related Workshops

- 2023 Int. Image Sensor Workshop (IISW).

- MFI 2022 First Neuromorphic Event Sensor Fusion Workshop. Videos

- tinyML Neuromorphic Engineering Forum. Videos

- 2022 Telluride Neuromorphic workshop.

- 2021 Telluride Neuromorphic workshop.

- ICCV 2021 Tutorial. Introduction to Event Detection Cameras.

- CVPR 2021 Third International Workshop on Event-based Vision. Videos

- ICRA 2021 Workshop On- and Near-sensor Vision Processing, from Photons to Applications (ONSVP).

- ICRA 2020 Workshop on Sensing, Estimating and Understanding the Dynamic World. Session on event-based camera companies iniVation and Prophesee.

- ICRA 2020 Workshop on Unconventional Sensors in Robotics. Videos

- CVPR 2019 Second International Workshop on Event-based Vision and Smart Cameras. Videos

- IROS 2018 Workshop on Unconventional Sensing and Processing for Robotic Visual Perception.

- ICRA 2017 First International Workshop on Event-based Vision. Videos

- ICRA 2015 Workshop on Innovative Sensing for Robotics, with focus on Neuromorphic Sensors.

- Event-Based Vision for High-Speed Robotics (slides) IROS 2015, Workshop on Alternative Sensing for Robot Perception.

- The Telluride Neuromorphic Cognition Engineering Workshops.

- Capo Caccia Workshops toward Cognitive Neuromorphic Engineering.

Supported by

Acknowledgments

The Microsoft CMT service was used for managing the peer-reviewing process for this conference. This service was provided for free by Microsoft and they bore all expenses, including costs for Azure cloud services as well as for software development and support.